AI agents as attack pivots: the new lateral movement

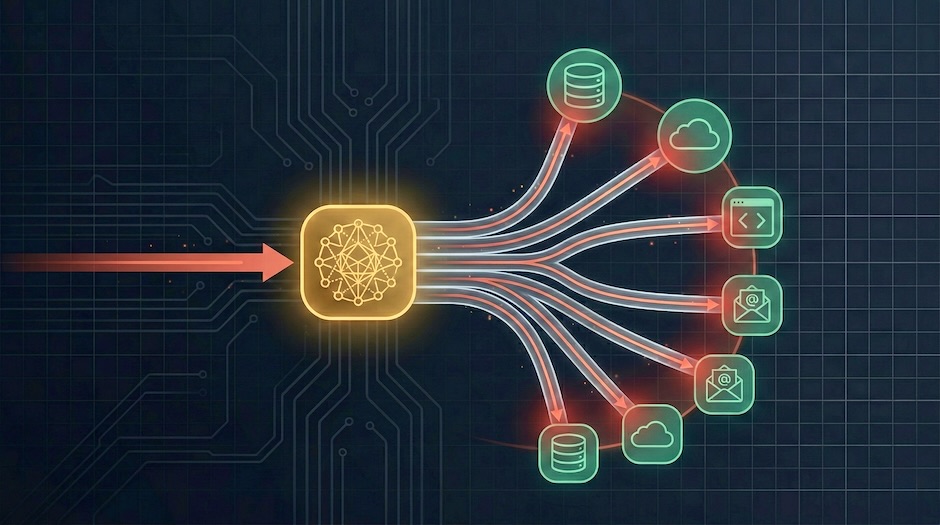

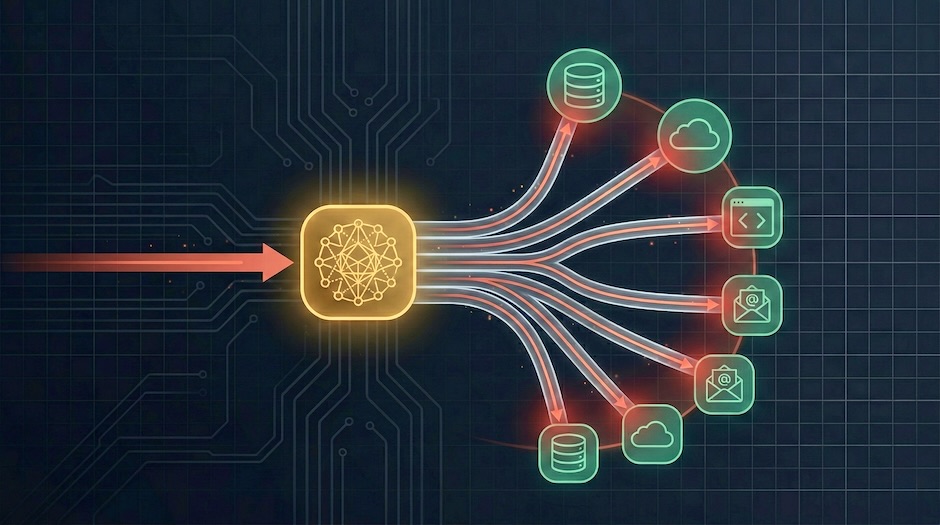

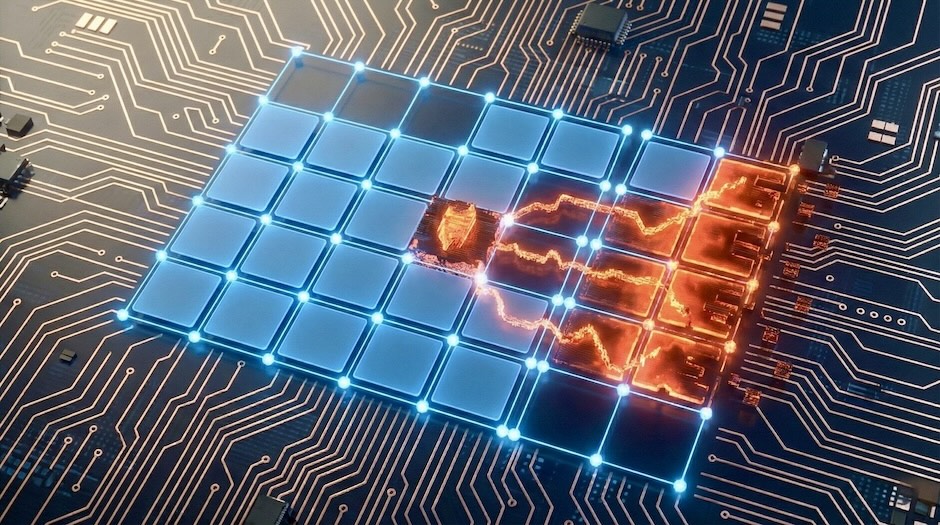

AI agents create a third class of lateral movement, bridging previously isolated systems through natural language, tool access, and execution autonomy.

As a speaker with international conference experience (Black Hat Arsenal USA, DEF CON AppSec Village USA, RSA Conference USA, Oracle JavaOne, Black Hat Arsenal Europe, Black Hat Arsenal Asia, DeepSec, BruCON, OWASP AppSecEU, OWASP AppSec Days, DevOpsCon Berlin/Munich/London/Singapore, JAX, Heise DevSec, Heise Sec-IT, Heise Herbstcampus, RuhrSec, JCon, JavaLand, Internet Security Days, IT-Tage Frankfurt, OOP, and others), I definitely enjoy speaking, presenting keynotes, and training on IT security topics.

Manual penetration testing of web applications, APIs, and mobile apps — including business logic flaws and chained attack paths.

Cloud security audit combining CIS benchmarks with pentesting experience to find exploitable misconfigurations in your AWS, Azure, or GCP setup.

Security review of Kubernetes and OpenShift platforms covering RBAC, pod security, container images, and CIS benchmark compliance.

How attackers plant instructions targeting agentic AI systems today that execute weeks later, and the defense architecture that stops them.

Why your sanitized user queries don’t protect you when the threat enters through your knowledge base.